Microsoft Azure App Service: My Personal Experience

In the course of my journey in software development and management, I have had the opportunity to manage large-scale enterprise applications on different cloud infrastructure platforms, including Microsoft Azure and Amazon Web Services (AWS).

Of these platforms, I have the most first-hand experience with Microsoft Azure, which I will refer to as Azure in this write-up. Today I will share my experience, the lessons I learnt, and how best to set up your app service so that your enterprise application scales well. Let’s dive right in.

Azure App Service:

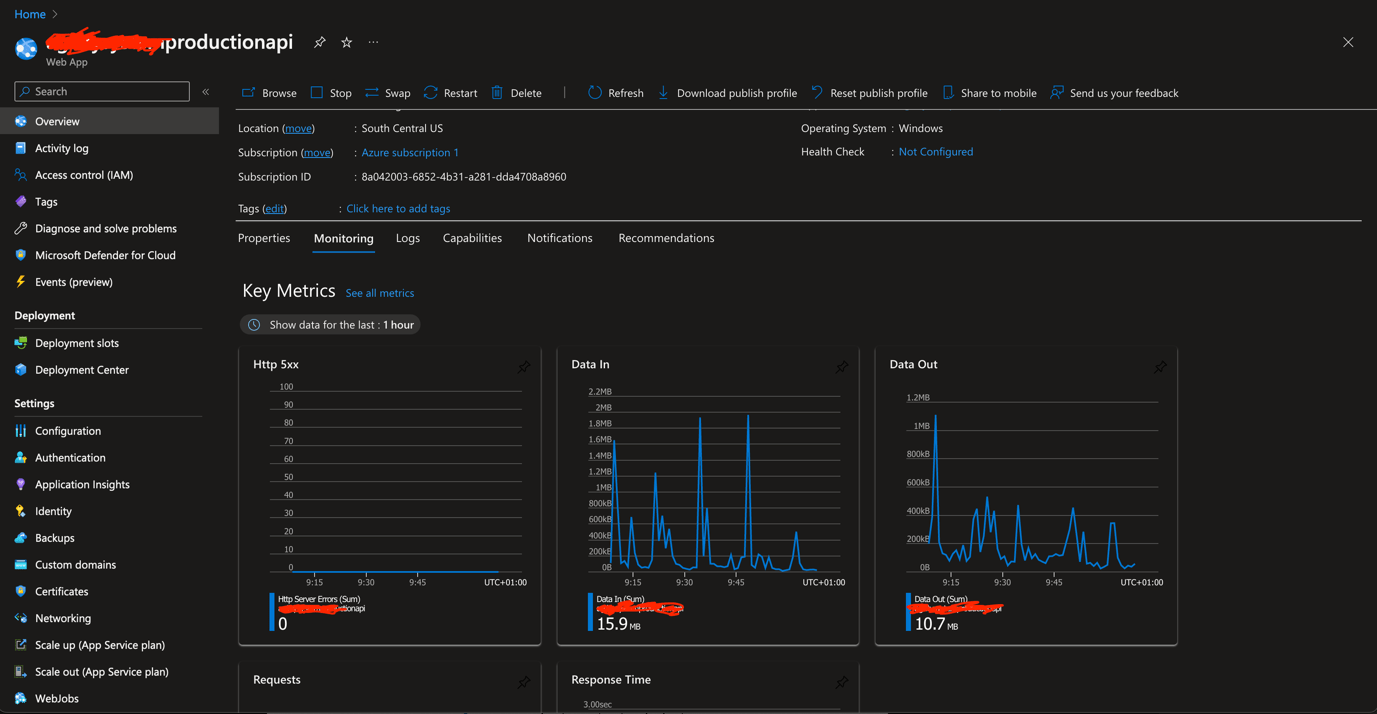

Applications hosted on Azure are generally hosted as app services. After a solution or an application has been developed in a supported language (C# in particular), it is hosted on Azure using Azure App Service.

As an infrastructure engineer, you are therefore expected to create an app service that your developers can deploy their applications to, so that the applications are available for use. Creating an app service is easy: carefully follow the instructions Azure presents on your organization’s Azure dashboard, and you have your app service.

The real task is configuring your app service so that it scales efficiently and quickly as your application is used after deployment and go-live. You also need to consider the cost implications as you set it up.

CONFIGURING YOUR AZURE APP SERVICE:

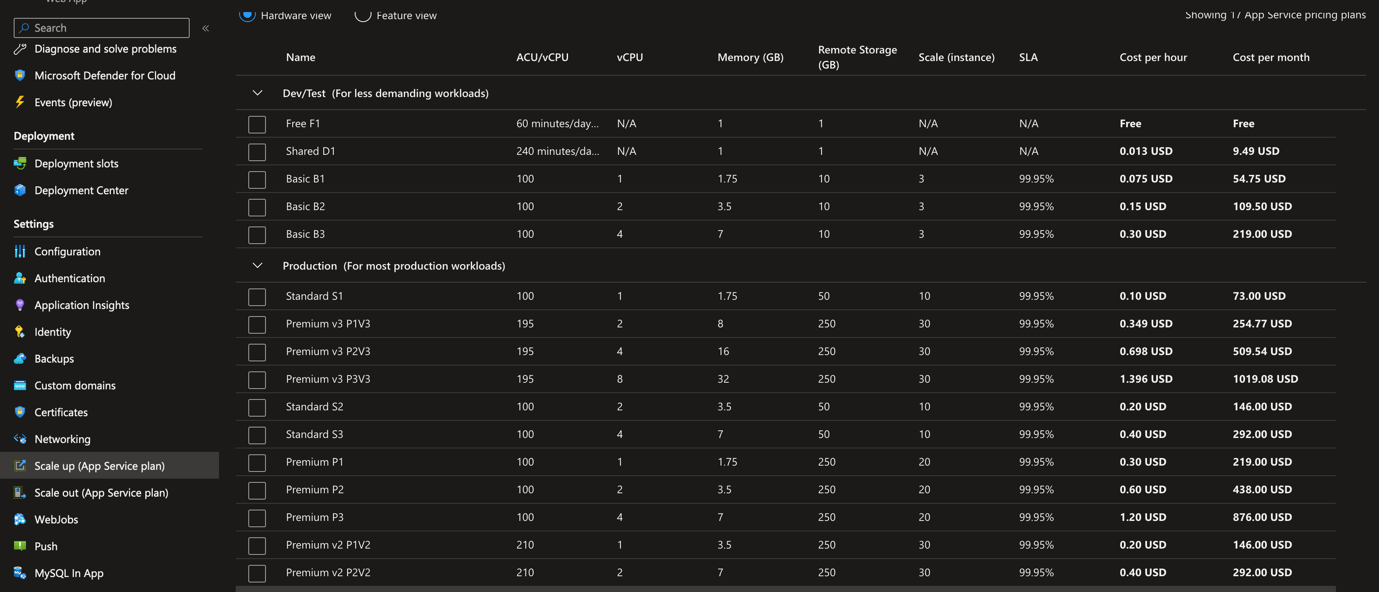

Configuring your app service begins with selecting an app service plan that suits it. Select the plan carefully based on the following:

1. How large your application is or will become over time.

2. The load your application will handle, based on the number of users, frequency of use, the number of API calls and database calls it makes over a given period, etc.

3. Your organization’s budget relative to the load the application is expected to handle.

After weighing these factors, you should be able to choose an app service plan by comparing your requirements with the capacity of the different plans Azure offers when you set up your app service from the Azure portal.
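As a rough illustration of weighing load against budget, the sketch below estimates how many instances a peak load would need and what that would cost. The function names, the 25% headroom, and every figure in it are hypothetical assumptions for illustration; they are not Azure prices or measured capacities.

```python
import math


def instances_needed(peak_requests_per_second: float,
                     requests_per_second_per_instance: float,
                     headroom: float = 0.25) -> int:
    """Estimate the instances needed to serve peak load with spare headroom."""
    if requests_per_second_per_instance <= 0:
        raise ValueError("per-instance capacity must be positive")
    required = (peak_requests_per_second * (1 + headroom)
                / requests_per_second_per_instance)
    # Always keep at least one instance running.
    return max(1, math.ceil(required))


def monthly_cost(instance_count: int, price_per_instance_per_month: float) -> float:
    """Cost of running a fixed number of instances for a month."""
    return instance_count * price_per_instance_per_month


# Hypothetical figures: 400 requests/s at peak, 120 requests/s per instance,
# and a made-up price of 150.0 per instance per month.
count = instances_needed(peak_requests_per_second=400,
                         requests_per_second_per_instance=120)
print(count, monthly_cost(count, price_per_instance_per_month=150.0))
```

Measure the per-instance capacity of your own application under load before relying on an estimate like this.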

SCALING YOUR APP SERVICE PLAN:

Azure also provides a way to scale the app service plan you have created and configured. How it scales depends on the scale mode you select and the details you specify for it.

In this section, I will show how to do this properly so that the application hosted on your app service stays available and scales efficiently. Azure scales by changing the instance count. There are two major ways to do this:

1. Scaling to a specific instance count:

In this case you specify a constant instance count for your app service, e.g. 4 instances or 7 instances. This type of scaling is fixed: your app service always runs on the number of instances you selected and does not scale beyond it.

This gives you a predictable consumption cost, as your app service will never scale beyond the specified instance count.

The disadvantage is that when the load on your application grows beyond what the specified instance count can handle, response times increase sharply, requests time out, and the application becomes inaccessible to your users.

You wouldn’t want your application users to be stuck with an application that drags and takes forever to load up or function.

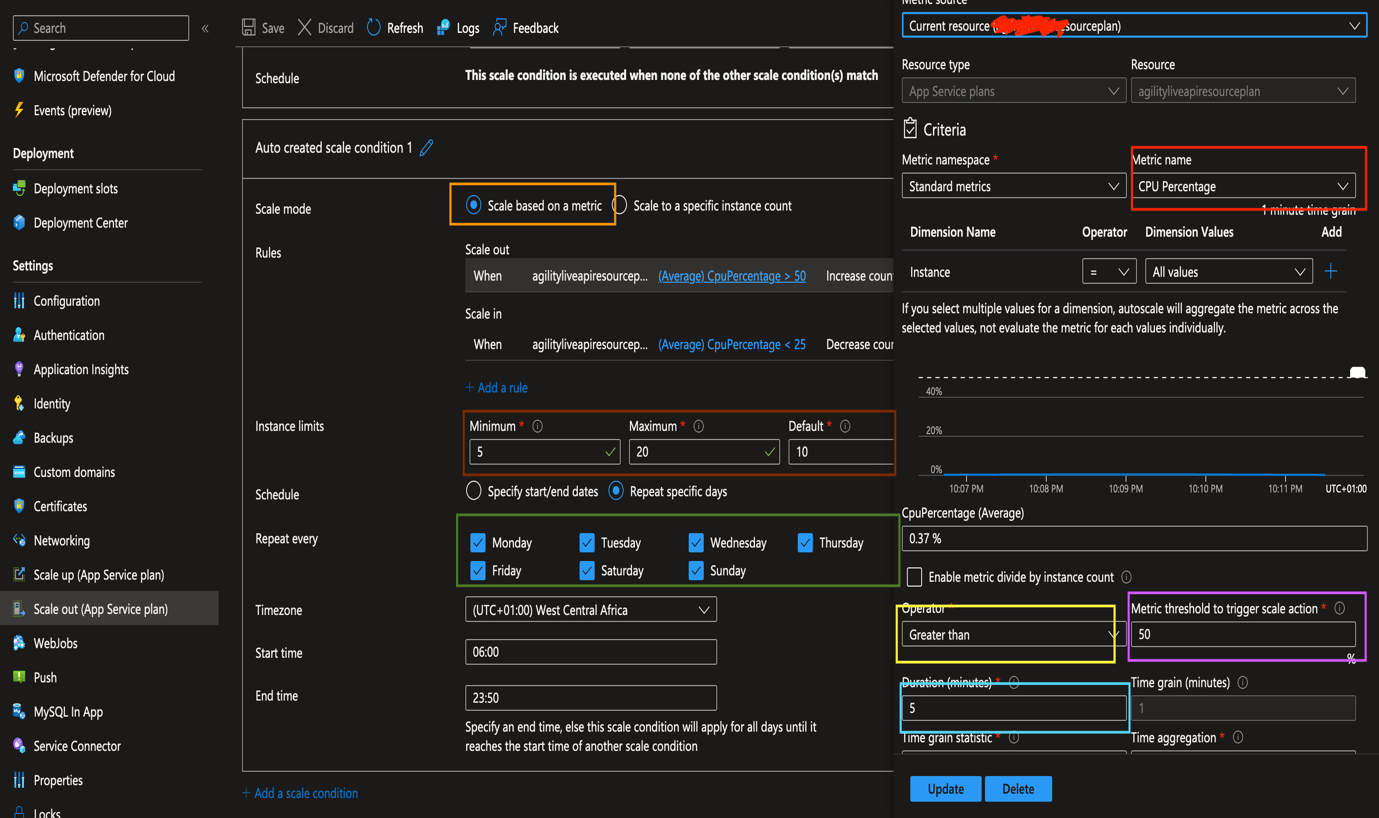

The image extract shows the specific instance count option highlighted in red. You can also create additional scale conditions by selecting the “add scale condition” button. However, in my experience, Azure uses the most recently created scale condition when scaling your app service.

2. Scale Based on a Metric:

In this type of scaling, you specify a metric, and Azure uses that metric to scale your app service out and in based on requests and load.

When you scale this way, you are increasing and decreasing your instance count based on the metric you selected and the number of requests your application receives from users. We will look at the best way to set a metric so as to ensure continuous availability of the application hosted by the app service.

We will look at each highlighted item and explain what it does. This will help you in determining how you want your scaling to work. Let’s get right in:

1. Minimum, Maximum, and Default:

These control how Azure’s autoscale feature scales your app service. Minimum is the lowest instance count autoscale will scale in to. If you specify a minimum of 4, for example, autoscale stops scaling in once the instance count reaches 4. Maximum, as the name implies, is the highest instance count autoscale will scale out to. Default is the instance count autoscale falls back to if it has difficulty reading or interpreting the scale-out or scale-in conditions you set. For instance, suppose you have specified your scale-out and scale-in conditions. If autoscale has difficulty reading them, rather than cause a scaling problem, it falls back to the Default instance count.
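The way Minimum, Maximum, and Default bound the instance count can be sketched in a few lines. This is only a model of the behaviour described above, not Azure's implementation; the function name and the `metrics_available` flag are hypothetical.

```python
def target_instance_count(desired: int, minimum: int, maximum: int,
                          default: int, metrics_available: bool = True) -> int:
    """Clamp a desired instance count to the configured limits.

    Falls back to `default` when the scale conditions cannot be evaluated.
    """
    if not minimum <= default <= maximum:
        raise ValueError("default must lie between minimum and maximum")
    if not metrics_available:
        # Hypothetical flag standing in for "autoscale could not read the conditions".
        return default
    return max(minimum, min(maximum, desired))


# With minimum=4, maximum=10, default=5:
print(target_instance_count(2, 4, 10, 5))    # scale in stops at the minimum
print(target_instance_count(12, 4, 10, 5))   # scale out stops at the maximum
print(target_instance_count(7, 4, 10, 5, metrics_available=False))  # falls back to default
```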

2. Scale Out and Scale In:

Scale out and scale in are metric conditions that autoscale uses to increase or decrease your instance count. This is exactly how Azure autoscale does its scaling. To set up scale-out or scale-in conditions, you need to consider the metrics highlighted in the screen example above. To scale out means to increase the instance count as the load or requests on your application increase. To scale in, on the other hand, means to decrease the instance count as the load or requests decrease. To perform a scale out or scale in, the following settings need to be set:

a. Metric Name: The metric name is the particular metric you want autoscale to monitor and act on based on the conditions set. Examples include Average CPU, Data Out, Data In, etc. You can select a metric name from the drop-down. I normally use Average CPU, as this is the metric that most directly reflects the request load on my application.

b. Operator: In practice, the operator determines whether a condition is a scale out or a scale in. Some common operators are greater than (>), less than (<), and equals (=). When you specify the greater than operator, you are typically setting up a scale out, and vice versa.

c. Metric Threshold:

The metric threshold is the trigger of the scale action. Irrespective of the scale type (scale out or scale in), the threshold is what triggers the scale action and autoscales your app service. For example, suppose you specify a threshold of 50, a metric name of Average CPU, and an operator of greater than (>). What you are telling autoscale is this:

When the Average CPU of my app service is greater than 50, scale out my app service by a particular instance count.
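The combination of metric value, operator, and threshold amounts to a simple comparison. The sketch below models it in Python; the operator strings and the `rule_fires` helper are hypothetical names chosen for illustration, not part of any Azure API.

```python
import operator

# Map the operator shown in the portal to a Python comparison.
OPERATORS = {
    ">": operator.gt,
    ">=": operator.ge,
    "<": operator.lt,
    "<=": operator.le,
    "=": operator.eq,
}


def rule_fires(metric_value: float, op: str, threshold: float) -> bool:
    """Return True when the metric satisfies the rule's operator and threshold."""
    return OPERATORS[op](metric_value, threshold)


# Scale-out rule from the example: Average CPU greater than 50.
print(rule_fires(72.0, ">", 50))   # True, so the scale-out action would run
print(rule_fires(50.0, ">", 50))   # False, exactly 50 is not greater than 50

# A matching scale-in rule (hypothetical threshold of 30).
print(rule_fires(20.0, "<", 30))   # True, so the scale-in action would run
```

Leaving a gap between the scale-out and scale-in thresholds, as in this example, helps avoid the instance count bouncing back and forth.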

d. Duration:

Duration is how far back in time autoscale looks to get performance statistics for your selected metric. For example, if you select a metric name of Average CPU and a duration of 5 minutes, you are telling autoscale to use the average CPU of your app service over the last 5 minutes.

e. Time Grain Statistic:

This is the statistic you want applied to the performance data of your app service over the selected duration. The options include Average, Maximum, Minimum, Count, etc. If you select Maximum with a metric name of CPU Percentage and a duration of 5 minutes, you are telling autoscale to check the maximum CPU percentage of your app service in the last 5 minutes.
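To make the interaction between duration and statistic concrete, here is a small sketch that takes one CPU sample per minute and reduces the last few minutes to a single number. It is a simplified model that collapses Azure's aggregation into one step; the sample values and the `aggregate` helper are made up for illustration.

```python
from statistics import mean

STATISTICS = {
    "Average": mean,
    "Maximum": max,
    "Minimum": min,
    "Count": len,
}


def aggregate(samples_per_minute: list, duration_minutes: int, statistic: str) -> float:
    """Apply a statistic to the most recent `duration_minutes` samples.

    `samples_per_minute` holds one reading per minute, oldest first.
    """
    if duration_minutes <= 0:
        raise ValueError("duration must be at least one minute")
    window = samples_per_minute[-duration_minutes:]
    return STATISTICS[statistic](window)


# Hypothetical CPU percentages for the last seven minutes, oldest first.
cpu = [20, 35, 90, 40, 45, 55, 60]

print(aggregate(cpu, 5, "Average"))  # 58, from the window [90, 40, 45, 55, 60]
print(aggregate(cpu, 5, "Maximum"))  # 90
print(aggregate(cpu, 5, "Minimum"))  # 40
```

The same window gives 58 for Average and 90 for Maximum, so against a threshold of 70 only a Maximum-based rule would fire. The statistic you choose changes how sensitive scaling is to short spikes.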

Action:

Action is what you want autoscale to do once all the conditions you specified in a to e above are satisfied. It is defined by an operation, an instance count, and a cool-down time. Operation is what autoscale should do: increase count, decrease count, increase percentage, decrease percentage, etc.

Instance count lets you specify the number of instances to add or remove. Cool down is the time you want autoscale to wait before performing another scale action. If you specify a cool down of 5 minutes, you are saying that after autoscale performs a scale action, it should wait 5 minutes before it performs another one.
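The effect of the cool-down is easiest to see in a small simulation. The sketch below is a toy model, not Azure's algorithm: the thresholds, step size, and `simulate` function are hypothetical, and it assumes the CPU readings do not change after a scale action, which a real application would not do.

```python
def simulate(cpu_by_minute, start_count, minimum, maximum,
             out_threshold=70, in_threshold=30, step=1, cooldown_minutes=5):
    """Return the instance count after each minute of a toy autoscale loop."""
    count = start_count
    last_scale_minute = None
    history = []
    for minute, cpu in enumerate(cpu_by_minute):
        # During the cool-down window no further scale action is taken.
        cooling = (last_scale_minute is not None
                   and minute - last_scale_minute < cooldown_minutes)
        if not cooling:
            if cpu > out_threshold and count < maximum:
                count = min(maximum, count + step)   # operation: increase count
                last_scale_minute = minute
            elif cpu < in_threshold and count > minimum:
                count = max(minimum, count - step)   # operation: decrease count
                last_scale_minute = minute
        history.append(count)
    return history


# Eight minutes of sustained high CPU, starting from 2 instances.
print(simulate([80] * 8, start_count=2, minimum=2, maximum=5))
# [3, 3, 3, 3, 3, 4, 4, 4]
```

Even though CPU stays above the threshold the whole time, the count only rises at minute 0 and again at minute 5, because the 5-minute cool-down blocks the actions in between.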

CONCLUSION:

I may not have touched on every aspect of autoscaling, but I believe that with what I have covered here, you can successfully autoscale your app service without downtime in your application. Feel free to contact me if you need further clarification. I am available via these channels:

Ehioze Iweka

Email [email protected],

Twitter (ehis_iweka)

LinkedIn: www.linkedin.com/in/ehiozeiweka.