Snapchat to introduce family safety tools to protect minors using its app

Following moves by major tech platforms to better protect minors using their services, Snapchat is preparing to introduce its own set of “family engagement” tools in the coming months.

Snap CEO Evan Spiegel teased the planned offering during an interview at the WSJ Tech Live conference this week, where he explained that the new product will essentially function as a family center that gives parents better visibility into how teens are using its service and provides privacy controls.

Spiegel stressed Snapchat’s more private nature as a tool for communicating with friends, noting that Snapchat user profiles were already private by default — something that differentiated it from some social media rivals until recently.

“I think the entire way this service is constructed really promotes a safe experience regardless of what age you are, but we never market our service to people under the age of 13,” he said, then added Snap is now working on new features that would allow parents to feel more comfortable with the app.

“I think that at least helps start a conversation between young people and their parents about what they’re experiencing on our service,” Spiegel said. These types of conversations can be a learning experience for parents and teens alike and can give parents the opportunity to guide their teens through some of the difficulties that come with using social media, such as how to handle uncomfortable situations like being contacted by a stranger.

Snap shared in June that this sort of work was on its roadmap, while parents who had lost their son to a drug overdose were advocating for the company to work with third-party parental control software applications. At the time, Snap said that it was being careful about sharing private user data with third parties and that it was looking into developing its own parental controls as a solution. (Recently, the company released tools to crack down on illicit drug sales on Snapchat to address this particular issue.)

Reached for comment on Spiegel’s remarks at the WSJ event, a Snap spokesperson confirmed the new family engagement tools will combine an educational component with tools meant to be used by parents.

“Our overall goal is to help educate and empower young people to make the right choices to enhance their online safety and to help parents be partners with their kids in navigating the digital world,” the spokesperson said.

“When we build new products or features, we try to do it in a way that reflects natural human behaviors and relationships — and the parental tools we are developing are meant to give parents better insights to help protect their kids, in ways that don’t compromise their privacy or data security, are legally compliant and offered at no charge to families within Snapchat,” they added.

The company said it looked forward to sharing more details about the family tools “soon.”

The parental controls will be under the purview of Snap’s new global head of Platform Safety, Jacqueline Beauchere, who recently joined the company from Microsoft, where she had served as chief online safety officer.

Her hiring comes at a time when regulatory scrutiny of social media companies and Big Tech, in general, has heated up.

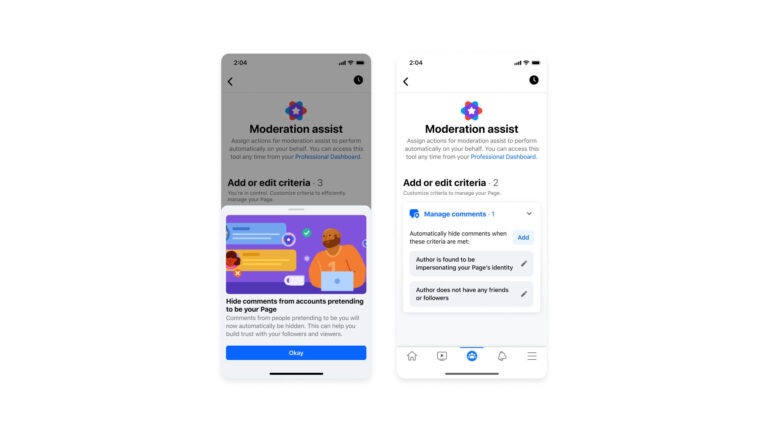

As U.S. lawmakers consider legislation that would require tech companies to implement new safeguards to better protect minors on their services, tech companies have been trying to get ahead of the coming crackdown by putting into place their own interpretations of those rules now.

Already, many of the top tech platforms used by teens have implemented parental controls, adjusted their default settings to be more private, or done both.

TikTok, for example, having just put a multimillion-dollar FTC fine behind it for its children’s privacy violations, led the way with the introduction of its “Family Safety Mode” feature in 2020. Those tools became globally available in spring 2020. Then, at the start of this year, TikTok announced it would change the privacy settings and defaults for all its users under the age of 18.

In March, Instagram followed with new teen safety tools of its own, then changed its default settings for minors later in the summer in addition to restricting ad targeting. Google, meanwhile, launched new parental controls on YouTube in February, then further increased its minor protections across Search, YouTube and other platforms in August. This also included making the default settings more private and limiting ad targeting.

Snap, however, had not yet made any similar moves, despite the fact that the company regularly touts how much its app is used by the younger demographic. Currently, Snap says its app reaches 90% of 13- to 24-year-olds in the U.S. — a percentage that’s remained fairly consistent over the past few years.

The company, however, is not against the idea of additional legal requirements in the area of minor protections.

Like other tech execs lately, Spiegel agreed that some regulation may be necessary. But he cautioned that regulation isn’t a panacea for the ills Big Tech has wrought.

“I think the important point to make is that regulation is not a substitute for moral responsibility and for business practices that support the health and well-being of your community — because regulation just happens far too late,” Spiegel said. “So, I think regulation certainly may be necessary in some of these areas — I think other countries have made strides in that regard — but again, unless businesses are proactively promoting the health and well-being of their community, regulators are always going to be playing catch up.”