Facebook is testing News Feed controls that will allow users to see fewer posts from groups and pages.

Facebook announced on Thursday that it is conducting a test that will give users a smidgeon more control over what they see on the platform.

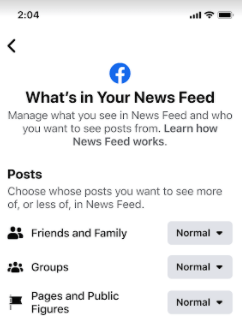

The test will be available for English-speaking users on Facebook’s app. It adds three sub-menus to Facebook’s menu for controlling what appears in the News Feed: friends and family, groups and pages, and public figures. Users in the test can keep the share of those posts in their feed at “normal” or choose to see more or fewer of them, based on their preferences.

Users in the test can do the same for topics, marking what they want to see and what they don’t want to see. Facebook says in a blog post that the test will affect “a small percentage of people” around the world before gradually expanding over the next few weeks.

Facebook will also expand a tool that allows advertisers to keep their ads away from specific topic areas, such as “news and politics,” “social issues,” and “crime and tragedy.” “When an advertiser chooses one or more topics, their ad will not be delivered to people who have recently engaged with those topics in their News Feed,” the company explained in a blog post.

Facebook’s algorithms are notorious for promoting inflammatory content and dangerous misinformation. As a result, Facebook, along with its newly renamed parent company Meta, is facing increasing regulatory pressure to clean up the platform and make its practices more transparent. As Congress considers solutions to give users more control over what they see and remove some of the opacity surrounding algorithmic content, Facebook is likely hoping that there is still time to self-regulate.

Last month, Facebook whistleblower Frances Haugen testified before Congress about how Facebook’s opaque algorithms can be dangerous, particularly in countries outside the company’s most scrutinized markets.

Even in the United States and Europe, the company’s decision to prioritize engagement in its News Feed ranking systems allowed divisive content and politically inflammatory posts to skyrocket.

“One of the consequences of how Facebook picks out that content today is that it’s optimizing for content that gets engagement, or reaction,” Haugen said last month on “60 Minutes.” “However, its own research shows that content that is hateful, divisive, or polarizing is more likely to incite anger than other emotions.”